Effective candidate deduplication is one of the big challenges when you want to manage and automate your staffing and recruitment efforts in a world-class Applicant Tracking Solution (ATS).

The bigger your candidate database becomes, the more important it is to be able to deal effectively with duplicate data.

Read on if you want to prepare yourself for the pitfalls.

When deduping candidates in a recruitment solution, consider this:

- 1. Help users search properly before they create a new candidate. Don’t use the “I’ll-slap-you-over-the-fingers-if-you-do-this-again” approach. Help them and guide them so that they avoid creating a duplicate.

- 2. Job applications from a website should be scanned before they are processed. Only then can an application be updated with historic information from an existing candidate record, if one exists.

- 3. Processing candidate deduplications on data already existing in your database is required to clean up existing data. Scheduled or ad hoc deduplications are also a necessity to provide an underlying safety net for duplicates which may pass the 2 steps above.

- 4. Engage your recruiters in the clean-up process. Let them review and merge records on the fly when they come across duplicates in your database; this creates ownership of data and helps you share the workload.

- 5. Consider also that a candidate may be one of your customer contacts. When setting up your deduplications you must be able to segment your data and make sure you are matching the relevant data: imported data against existing data, candidates in a given country against each other, or candidates against your customer contacts, etc.

Searching before you create

Better to prevent than to fix afterwards? Yes, in an ideal world it would be great if you could prevent the duplicate from being created in the first place. There are many ways to facilitate dupe detection or even dupe blocking.

Start by outlining how new Contacts and Candidates are created. Then, for each entry point, ask: at what point should the search be done? Before all data is entered, or after the data has been entered?

Your ATS most likely has many entry points, and you will end up with custom rules for each data entry process. For example, you cannot block applications coming from the web, because you would not get the new data. Nor can you blindly link new data on, say, an email match: some records may not have an email, others may have an alternative email, and so on.

It is great to do as much dupe prevention as possible. But make sure you also have a strategy and/or solution in place for dealing with the dupes which still get through your front-door checks.

Start by getting a solid candidate deduplication process in place for data already stored in your database, regardless of whether it was entered 3 seconds ago, 3 months ago or 3 years ago.

This will be your back-bone protection.

Then start putting front-door dupe prevention/detection in place for the most vulnerable data entry points.

Example: Effective Dupe Prevention and Client Search – All in One!

Scanning new resumes before processing

Applicant Tracking Solutions, like TargetRecruit, have many ways for you to enter new resumes. The import of a resume results in either the creation of a new candidate record or the update of an existing candidate record.

Each resume should be checked for uniqueness before it is used in any business process.

When recruiters import resumes, e.g. from emails, the matching should be immediate. It should give the user the option to merge the new data with data from an existing candidate, or to accept the resume as a new candidate.

Most ATS will check for a matching email, and if the email exists, update the existing candidate and e.g. add the new resume data.

In many cases this may be sufficient, but it doesn’t work if the candidate submits the resume with an alternative email address.
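As a rough illustration of why an email-only check falls short, here is a minimal sketch of a front-door check that falls back to name plus phone when no email matches. The `Candidate` shape, the function names and the matching rules are all assumptions made for this example; they are not how TargetRecruit or any particular ATS works. The function only reports possible matches, so the recruiter still decides whether to merge or create a new candidate.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Candidate:
    # Hypothetical record shape; a real ATS record has many more fields.
    name: str
    emails: list = field(default_factory=list)
    phone: str = ""


def normalize_email(email: str) -> str:
    return email.strip().lower()


def normalize_phone(phone: str) -> str:
    # Keep digits only, so differently formatted numbers compare equal.
    return re.sub(r"\D", "", phone)


def normalize_name(name: str) -> str:
    # Lowercase and collapse repeated whitespace.
    return " ".join(name.lower().split())


def find_possible_duplicates(new: Candidate, existing: list) -> list:
    """Return (record, reason) pairs for a user to review; nothing is merged here."""
    new_emails = {normalize_email(e) for e in new.emails}
    new_phone = normalize_phone(new.phone)
    new_name = normalize_name(new.name)
    hits = []
    for record in existing:
        if new_emails & {normalize_email(e) for e in record.emails}:
            hits.append((record, "email"))
        elif (
            new_phone
            and new_name == normalize_name(record.name)
            and new_phone == normalize_phone(record.phone)
        ):
            # Assumed fallback rule: same name and same phone digits.
            hits.append((record, "name+phone"))
    return hits
```

With this sketch, a resume submitted from a private email address would still be flagged against the existing record if the name and phone number agree, which the email-only check would miss.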

Example: Resume import with dupe detection feature

Candidate deduplication – existing data

Candidate deduplication is not just about dupe prevention.

Dupes already exist, and new duplicates will always appear.

If you put an impenetrable wall around your database, you also make it almost impossible for anyone to enter new records.

Accept the fact: Dupes will appear and they will keep accumulating.

A better option is a 2-level approach.

Make sure you have a user-friendly mechanism which detects the more obvious duplicates upfront.

Combine this with a more thorough process, running in the background, to find duplicates which pass your front-door check. Such a process will also help you detect duplicates which already exist, and duplicates which come from other sources that are not protected by your dupe prevention solution.

In fact, we recommend starting by getting a solid deduplication process in place for data already stored in your database, regardless of whether it was entered 3 seconds ago, 3 months ago or 3 years ago.

This will be your back-bone protection, and it will help you remove existing duplicates.

It will also, if run on a regular basis (daily/weekly), find duplicates which are not detected by the entry check functionality.

Once that is done, start putting front-door dupe prevention/detection in place for the most vulnerable data entry points.
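To make the idea of a scheduled back-end scan more concrete, the following is a minimal sketch of one batch pass over records already in the database. The record fields (`id`, `last_name`, `phone`) and the match key are assumptions chosen for this example, and exact-key grouping is a deliberately simple stand-in for the fuzzier matching a dedicated deduplication tool would apply.

```python
from collections import defaultdict
from typing import Optional


def match_key(record: dict) -> Optional[tuple]:
    # Hypothetical match key: lowercased last name plus the phone digits.
    # Records without a phone number get no key and are skipped.
    phone_digits = "".join(ch for ch in record["phone"] if ch.isdigit())
    if not phone_digits:
        return None
    return record["last_name"].strip().lower(), phone_digits


def find_duplicate_groups(records: list) -> list:
    """Group record ids sharing a match key; only groups of 2 or more are reported."""
    groups = defaultdict(list)
    for record in records:
        key = match_key(record)
        if key is not None:
            groups[key].append(record["id"])
    return [ids for ids in groups.values() if len(ids) > 1]
```

Because the pass looks at every stored record, it does not matter how or when a record entered the database. Run daily or weekly, it would report groups for review rather than merge anything on its own.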

Read more: Top 5 reasons why duplicates exists and keep accumulating

Engage the end-users, collaborate

Duplicates are everyone’s pain, so shouldn’t cleaning the data be something everyone takes part in?

Do you have a strategy for your data stewardship?

Having an Admin go through lists, reviewing and merging candidates in pairs or in bulk, may seem like the most obvious solution to cleaning up duplicates. But some duplicates may be better resolved by the end users.

Information about potential duplicates should be shared with the end users, either directly on their candidate detail pages, on their dashboards, or through simple lists for them to review.

You may not want your end users to merge and thus delete records. You could have business processes which make deleting tricky.

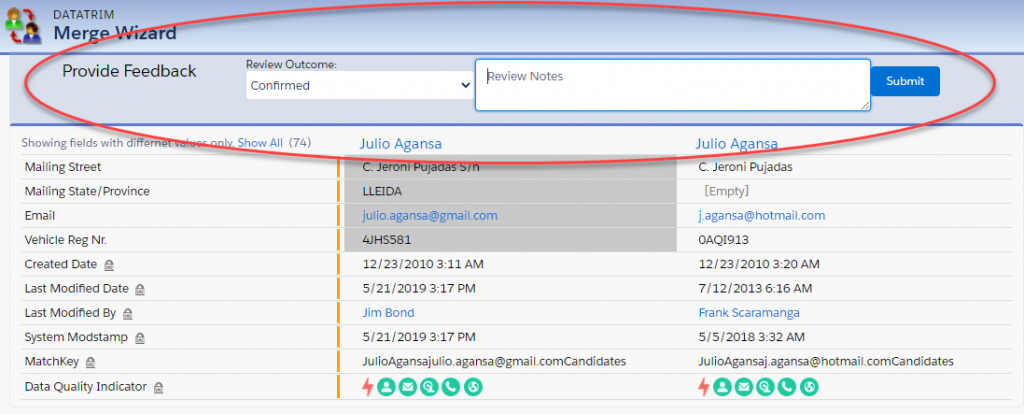

In this case you can still leverage the collaboration idea. Instead of merging, the end user can provide feedback: decide which records to keep, and possibly leave a note regarding the merge.

Your Data Steward can then review and merge these duplicates based on the feedback provided.

Segmenting your data

Working with a database containing companies, customers, prospects, business contacts, candidates etc. automatically defines different sets of data.

The settings used for matching e.g. company records may be the same no matter what type of company you store. But the action you want to take on the potential duplicates may be different.

If e.g. you have a customer and a prospect record you certainly want to keep the customer record as your surviving record, right?

For a Candidate with a placement matching a candidate without a placement, you want to keep the candidate with the placement. But what if neither of them has a placement? Would you then keep the most recently created, or the most recently updated one?

And for candidates which are also business contacts? Do you really want to merge, or do you just want to link the records together to illustrate the relationship?

Plan for how you want to merge, and which data you trust the most. Then use these rules to go back and break your data into smaller parts, where each type of duplicate can be treated the same way.
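A survivorship rule like the one discussed above can be written down very compactly. The sketch below assumes a hypothetical `CandidateRecord` with a placement flag and a last-updated date, and it picks "most recently updated" as the tie-breaker purely for illustration; "most recently created" would be an equally valid choice depending on which data you trust.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class CandidateRecord:
    # Hypothetical fields, used only to illustrate the rule.
    record_id: str
    has_placement: bool
    last_updated: date


def pick_survivor(a: CandidateRecord, b: CandidateRecord) -> CandidateRecord:
    # Rule 1: a record with a placement beats one without.
    # Rule 2 (assumed tie-breaker): the most recently updated record wins.
    return max((a, b), key=lambda r: (r.has_placement, r.last_updated))
```

Writing the rule as an ordered key makes the priority explicit: the placement flag is compared first, and the date only matters when both records agree on it.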

Read more: Merging Records – Survivorship considerations

Also segment your data if your database is big.

Segmentation will help you prioritize and process the records which are most important first.

You may also think about splitting the database by geography/business regions. Multiple Data Stewards can then work simultaneously on different sets of records for the review and merging process.

In any case you should not consider your deduplication as a black box where you put in all your data and merge every duplicate found.

A structured process requires the ability to segment your data, so that you can process data in segments and clean up your data consistently.
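As a small sketch of what processing in segments can look like, the function below groups records by a segment field and only produces comparison pairs within each segment. The record layout and the `country` field in the usage example are assumptions for illustration; the actual matching of each pair is left out.

```python
from collections import defaultdict
from itertools import combinations


def candidate_pairs_by_segment(records, segment_key):
    """Yield (segment, record_a, record_b) for every pair inside the same segment."""
    segments = defaultdict(list)
    for record in records:
        segments[record[segment_key]].append(record)
    for segment, members in segments.items():
        # Records are only compared with others from their own segment.
        for a, b in combinations(members, 2):
            yield segment, a, b


records = [
    {"id": 1, "country": "FR"},
    {"id": 2, "country": "FR"},
    {"id": 3, "country": "DK"},
    {"id": 4, "country": "FR"},
]
pairs = list(candidate_pairs_by_segment(records, "country"))
```

In this example only the three pairs among the FR records are produced, instead of the six pairs an unsegmented comparison of four records would need, and each segment can be handed to a different Data Steward.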

Summary

Candidate deduplication in an Applicant Tracking Solution is more than just preventing duplicates from entering.

There are different elements to consider, including How? Who? When? and What?

In addition, as previously discussed, matching (finding duplicates) in a database of candidates is not the same as matching in a business contact database.

Read more: Deduping Candidates in a Recruitment Solution

DataTrim is a certified salesforce™ ISV partner that provides deduplication solutions for salesforce.

With years of experience in performing deduplications on client databases, DataTrim has dedicated matching functionality for use with candidate deduplication as well as integration to TargetRecruit, JobScience and other ATS built on the salesforce™ platform.

Contact us to learn more or read more at: www.datatrim.com

DataTrim helps companies and organizations worldwide in improving and maintaining a high level of Data Quality.

DataTrim improves the reliability, completeness and consistency of data by applying a set of data cleaning treatments called The Data Laundry.

The Data Laundry solutions and services add experience-based data cleaning processes to lead management, marketing automation, customer support and account management processes in salesforce, and create direct impact on day-to-day usage and productivity in a simple-to-use, collaborative and cost-effective way.