Fuzzy Logic – Probabilistic Matching – Blocking and Scoring?

We are often asked about our technology:

- Do you use Fuzzy Logic?

- Are you using Probabilistic Matching?

- What’s your process for Blocking and Scoring?

- etc.

We normally take pride in protecting our end users from having to understand any of this, and for those who would like to take a deeper look: Record linkage on Wikipedia may be a starting point.

But to be short the answer is, YES we do use Fuzzy logic, YES we do probabilistic matching and YES we do have algorithms in place for Blocking and Scoring, -but so does everyone else because there is not one single clear definition of what these terms contain when it comes to the implementation of them.

No, look for Completeness, Accuracy and Speed

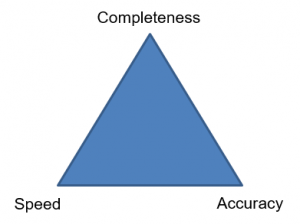

The nature of any deduplication problem lies in optimizing the coverage of 3 needs which 2-by-2 becomes the opposing combination to the 3rd.

- Completeness

- Accuracy

- Speed

If a solution is capable of finding all duplicates, without giving ‘false’ or negative dupes in no time – then you have the optimal solution.

Unfortunately the 3 elements aren’t combined easily.

- You can imagine a fast solution, which find exact duplicates, but being fast means that you may not get all duplicates (completeness).

- To get all duplicates in with a high level of accuracy even a computer will need a lot of time.

- Identification of all duplicates in limited time (speed) can only be achieved by compromising on the accuracy and accept that an amount of the identified duplicates are ‘false’ duplicates

.

In practice this can be addressed in various ways:

Simplify the Dupe definition: If dupes are easily identified e.g. has same email, then a solution can be fast and accurate although it is of cause not complete as many duplicates will escape this simplicity. To improve this approach you can then add criteria (Match Keys) e.g. Name and Mobile, Name and street etc. but the more you add of these match keys – the more slow will your solution become.

This approach is very common and will take care of the most obvious duplicates in any database. The factors which will make this approach fail, is lack of data normalization and standardization as well as missing data, which all will have a negative impact on the result.

By multiplying the number of Match Keys you will achieve completeness with regards to identifying all potential duplicates in your database. With many potential duplicates the Blocking and Scoring algorithm becomes the critical factor as to eliminate and exclude duplicates which after closer review turns out not to be duplicates after all. E.g. you can have 2 records with the same email (info@datatrim.com) even though the 2 contacts are not the same.

If in addition; your time is limited (computer processor time) your Scoring and Blocking algorithms will provide a level of inaccuracy in your result and you will therefore experience a that you during your review process will encounter many ‘False’ duplicates.

DataTrim Dupe Alerts combines the best from the 2 scenarios above, and because DataTrim Dupe Alerts are processing on dedicated servers the computer power will emphasize on achieving completeness and accuracy in the deduplication process.

The User Experience is what matters!

In the end, it’s the user experience that matters, so test the solution.

With DataTrim Dupe Alerts you do not have to worry about which match keys that works best for you and only gradually improve your deduplication effort as you build your rules, it is all built in from day one.

DataTrim Dupe Alerts comes with an initial set of predefined Match Keys. We have, based on years of experience of matching large international b2b and b2c databases, identified and included a matching process based on best practice.

All you have to do is install it and Run!

To provide completeness in large databases we realize that records which may look like potential duplicates from an algorithm point of view but may not be duplicates from a business point of view becomes a natural part of the matching result.

To provide a structural overview of the result, DataTrim Dupe Alerts has build in a Classification process which classifies each duplicate for you.

It provides you with a confidence indicator (Match Class) which allows you to prioritize your review process and enable you to review and merge your duplicates in an efficient way.

Curious to see how it works? Take a look at our 4 min demo: View Demo or install a Trial from the AppExchange: Install Trial