Initiating a deduplication of candidate records after migrating data from the previous Hiring, Staffing, ATS system, or otherwise after parsing numerous CVs you may end up with 1000’s

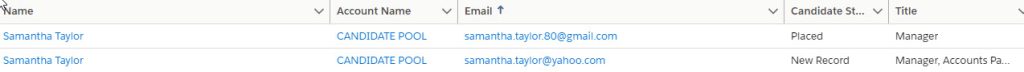

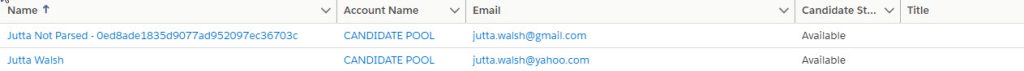

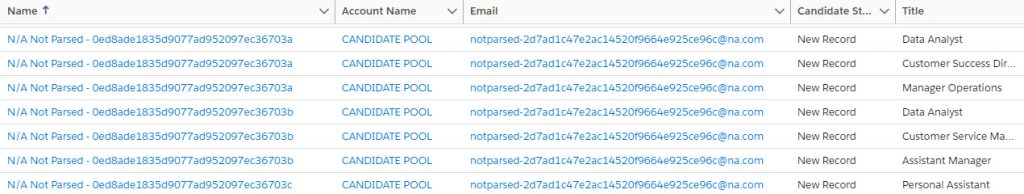

of candidates records which in a snapshot may look like this:

Different Candidate Status values, and a mix of “bad” data.

You may set up rules that use the email address to prevent duplicate candidate records from entering the system, but duplicates may still slip through.

The candidate may not have provided the same email address as last time, or the parser did not find the email, so the default value (notparsed-xxx…xxx@na.com) was populated in the email field.

This situation highlights 2 data quality issues:

- The missing data.

Obviously, the candidate records should be reviewed and the appropriate first name, last name and emails should be retrieved and updated.

This could mean reviewing a lot of records. And if data has been migrated from more than one source, the missing data could be available on the other redundant, or duplicate, records, which leads us to:

- The duplicate data.

Duplicate data will always exist and a strategy should be put in place to prevent or address the existence of duplicates.

In this article we will share our best practices and show how they will speed up your process of getting to a clean database.

At the bottom of this article, you will find more information about how to perform deduplication on candidate records using DataTrim Dupe Alerts.

Before you start matching, think about what you want to do with the outcome

Deduplication of candidate records

When matching candidates, the result will be a large list of potential duplicates. If they are all “clear” duplicates (e.g. they have the same email), you might think that you just want to merge them all.

Think again!

Before you merge, take into consideration that the merging process will, for each pair of duplicates, take one record, which becomes your new Master record (the Survivor). Data from the other record (the Dupe) is moved across to the Survivor only where the Survivor is missing this information (fields are empty or a checkbox is not checked).

This means that if the Survivor doesn’t have an email, and the Dupe has an email, the merging process will carry the email across.

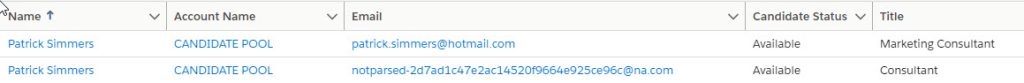

But because the CV parser always populates the email field with a unique email, the field on the Survivor is never empty, and so the email on the Survivor will always remain, even if it is: notparsed-2d7ad1c47e2ac14520f9664e925ce96c@na.com

To effectively merge, you should think about how you would define your surviving records.
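The fill-only-empty-fields behaviour described above can be sketched in a few lines of Python. This is an illustration of the logic, not the actual merge implementation: the field names, the `merge` and `is_empty` helpers, and the sample records are all assumptions made for the example.

```python
def is_empty(value):
    """A field counts as empty if it is None, blank, or an unchecked checkbox."""
    return value is None or value is False or (isinstance(value, str) and not value.strip())


def merge(survivor, dupe):
    """Return the merged record: the survivor wins, the dupe only fills gaps."""
    merged = dict(survivor)
    for field, value in dupe.items():
        if is_empty(merged.get(field)) and not is_empty(value):
            merged[field] = value
    return merged


# Hypothetical pair: the survivor carries the parser's placeholder email.
survivor = {
    "name": "Jane Doe",
    "email": "notparsed-2d7ad1c47e2ac14520f9664e925ce96c@na.com",
    "phone": None,
}
dupe = {"name": "Jane Doe", "email": "jane.doe@example.com", "phone": "555-0100"}

result = merge(survivor, dupe)
print(result["phone"])  # the empty phone is filled from the dupe
print(result["email"])  # the placeholder email is kept, because it is not empty
```

Swapping the two records, so that the record with the real email is the survivor, keeps the real email instead. That is why the choice of survivor matters.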

Candidate Status

Let us ignore the “not parsed” values for a second and take a look at the Candidate Status.

Suppose 2 candidates are presented as potential duplicates, and one has the Candidate Status “Placed” and the other “New Record” or “Available”.

Which one would you keep?

There are 2 ways of addressing these scenarios.

Use a formula field to apply the logic

To perform a deduplication of candidate records, you can put all the candidates into one black bucket.

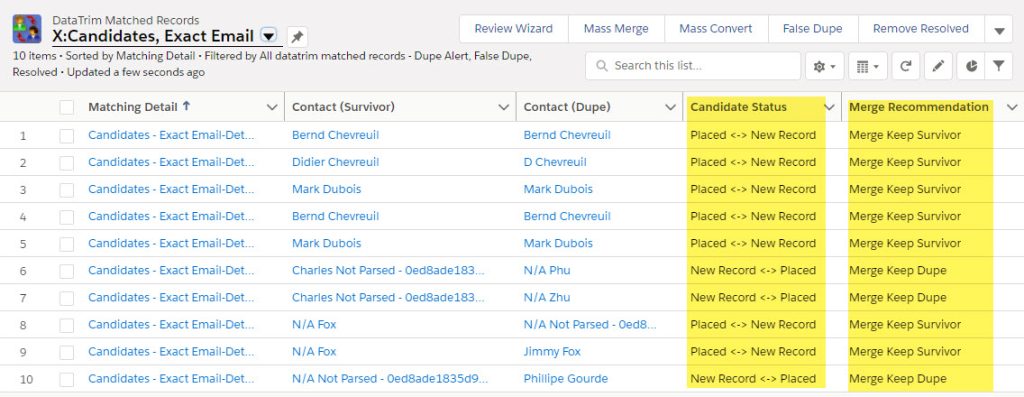

Perform the matching and then segment the outcome (Matched Records) before you merge.

Create a Salesforce formula field to compare the Status values and thus determine which record should become your survivor.

Use this formula field in your reviewing and merging process to make sure you are merging the duplicates in the recommended way.

- It could be implemented using an advanced formula which generates a “Merge Recommendation”, or

- You can make a simple formula which just displays the Candidate Status from the 2 Candidates. You can sort and filter by this field, to list the different combinations.
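The logic behind the two formula variants can be sketched as follows. This is Python pseudologic rather than Salesforce formula syntax, and the ranking of the status values (in particular “Available” above “New Record”) is an assumption made for illustration; you would replace it with your own business rules.

```python
# Hypothetical ranking: a higher number means the record is more valuable to keep.
STATUS_RANK = {"Placed": 3, "Available": 2, "New Record": 1}


def status_pair(status_a, status_b):
    """Simple variant: show both statuses so the list can be sorted and filtered."""
    return f"{status_a} / {status_b}"


def merge_recommendation(status_a, status_b):
    """Advanced variant: recommend which record of the pair should survive."""
    rank_a = STATUS_RANK.get(status_a, 0)  # unknown statuses rank lowest
    rank_b = STATUS_RANK.get(status_b, 0)
    if rank_a > rank_b:
        return "Keep A"
    if rank_b > rank_a:
        return "Keep B"
    return "Review manually"


print(status_pair("Placed", "New Record"))
print(merge_recommendation("Placed", "New Record"))
print(merge_recommendation("Available", "Available"))
```

Pairs with equal rank are deliberately not decided automatically, so they can be filtered out and reviewed by hand.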

In a list view, this formula field will look like this:

Segment data at the Alert Level

Alternatively, you can take one step backwards and create multiple subsets of data, and match them individually.

This way you can compare the different sets of records against each other and define one set of survivorship rules per alert.

Example: If you match the “Placed” Candidates against the “New Record” and/or “Available” Candidates, you can mark the dataset with the “Placed” Candidates as Survivors.

Now ALL potential duplicates generated from this matching need to be merged with the records from the first dataset as survivors. Logical, right?

Segmenting data at the matching level is our recommended approach, as it logically makes more sense.

It allows the user to clean up parts of the data separately and focus on the most valuable candidates first (e.g. those who are “Placed”), then work down to the less important candidates later.

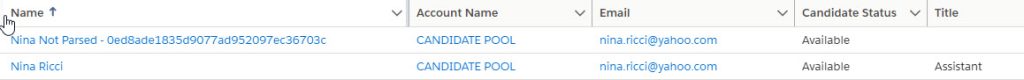
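Segmenting before matching can be sketched as below. The example assumes a plain exact match on a normalized email as a stand-in for the real matching engine, and the record layout (`id`, `status`, `email`) and the `match_segments` helper are hypothetical.

```python
def match_segments(survivors, others):
    """Match two segments on email; every hit keeps the record from `survivors`."""
    by_email = {c["email"].strip().lower(): c for c in survivors}
    pairs = []
    for candidate in others:
        key = candidate["email"].strip().lower()
        if key in by_email:
            # (survivor id, dupe id): the survivor side is fixed by the segment.
            pairs.append((by_email[key]["id"], candidate["id"]))
    return pairs


# Hypothetical sample data.
candidates = [
    {"id": 1, "status": "Placed", "email": "jane.doe@example.com"},
    {"id": 2, "status": "Placed", "email": "john.roe@example.com"},
    {"id": 3, "status": "New Record", "email": "Jane.Doe@example.com"},
    {"id": 4, "status": "Available", "email": "someone.else@example.com"},
]

placed = [c for c in candidates if c["status"] == "Placed"]
others = [c for c in candidates if c["status"] in ("New Record", "Available")]

pairs = match_segments(placed, others)
print(pairs)  # [(1, 3)]
```

Because the survivor side is decided by the segment, no per-pair decision is needed when merging the result.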

Not Parsed or partly Parsed Candidates

While matching and reviewing your candidate duplicates, you may start observing candidates with incomplete profiles being identified as duplicates of completely parsed profiles.

As with the Candidate Status above, we recommend creating multiple subsets of data. This will make the merging process as simple as possible.

The strategy is to identify batches of duplicates that can all be treated (merged) in the same way, and to keep all the exceptions for last.

We can divide the candidate records into 4 groups:

Example

| | Email Parsed | Email Not Parsed | Total |
| --- | --- | --- | --- |
| Name Parsed: | 150.000 | 1.000 | 151.000 |
| Name Not Parsed: | 350 | 500 | 850 |
| Total: | 150.350 | 1.500 | 151.850 |
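A breakdown like the one in the table can be produced with a small script. The sketch below recognizes the parser's default email by its `notparsed-` prefix and `@na.com` domain, as shown earlier in this article. How a non-parsed name is stored is not specified here, so treating an empty first or last name as “not parsed” is an assumption, as are the field names and the sample records.

```python
from collections import Counter


def email_parsed(email):
    """False for an empty email or the parser's notparsed-...@na.com default."""
    email = (email or "").strip().lower()
    is_placeholder = email.startswith("notparsed-") and email.endswith("@na.com")
    return bool(email) and not is_placeholder


def name_parsed(first_name, last_name):
    """Assumption: a name counts as parsed when both parts are non-empty."""
    return bool((first_name or "").strip()) and bool((last_name or "").strip())


def group_of(candidate):
    """Return (name parsed?, email parsed?) for one candidate record."""
    return (
        name_parsed(candidate["first_name"], candidate["last_name"]),
        email_parsed(candidate["email"]),
    )


# Hypothetical sample data, one record per group.
candidates = [
    {"first_name": "Jane", "last_name": "Doe", "email": "jane.doe@example.com"},
    {"first_name": "John", "last_name": "Roe", "email": "notparsed-abc123@na.com"},
    {"first_name": "", "last_name": "", "email": "someone@example.com"},
    {"first_name": "", "last_name": "", "email": "notparsed-def456@na.com"},
]

counts = Counter(group_of(c) for c in candidates)
for (has_name, has_email), count in sorted(counts.items(), reverse=True):
    print(f"name parsed={has_name}, email parsed={has_email}: {count}")
```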

To effectively deduplicate these candidate records, we would create 3 sets of data:

1. Initially we would want to find out whether any candidate in the group of 350 (no name but with an email) already exists in the group of 150.000 (both name and email).

Select the survivorship rule so that the candidate with the parsed name is kept as survivor.

In the example above we would match the 150.000 against the 350

2. Secondly, we would match the candidates without an email but with a name (1.000) against those with both an email and a name (150.000).

Select the survivorship rule so that the candidate with the parsed email is kept as survivor.

3. Finally, we would create the “Standard” Alert.

This is an alert where all candidates with an email are matched against each other (150.350).

This alert may be broken down further into subsegments according to Candidate Status, geography, etc.

You might want to split this into 2 alerts:

a. One where you perform an exact match on the email.

b. And another where you allow the email to be different.
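The difference between the two variants of the “Standard” alert can be sketched as follows. The example uses a deliberately simple stand-in for the matching: an exact comparison of normalized emails for variant a, and an exact comparison of normalized names for variant b. A real matching engine is typically more tolerant than this, and the record layout and helper names are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def normalize(text):
    """Lowercase and collapse whitespace so trivial differences do not matter."""
    return " ".join(text.lower().split())


def pairs_by_key(candidates, key):
    """Return all pairs of candidate ids that share the same key value."""
    groups = defaultdict(list)
    for candidate in candidates:
        groups[key(candidate)].append(candidate["id"])
    pairs = set()
    for ids in groups.values():
        pairs.update(combinations(sorted(ids), 2))
    return pairs


# Hypothetical sample data.
candidates = [
    {"id": 1, "name": "Jane Doe", "email": "jane.doe@example.com"},
    {"id": 2, "name": "jane  doe", "email": "Jane.Doe@example.com"},
    {"id": 3, "name": "Jane Doe", "email": "jdoe@example.org"},
    {"id": 4, "name": "John Roe", "email": "john.roe@example.com"},
]

# Variant a: exact match on the email.
exact = pairs_by_key(candidates, lambda c: normalize(c["email"]))
# Variant b: same name, email allowed to differ (pairs already found in a are left out).
relaxed = pairs_by_key(candidates, lambda c: normalize(c["name"])) - exact

print(sorted(exact))    # [(1, 2)]
print(sorted(relaxed))  # [(1, 3), (2, 3)]
```

Pairs from variant a are usually safe to merge in bulk, while pairs from variant b deserve a closer review, because two different people can share a name.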

The 3 alerts above will of course not cover all scenarios. The general data quality issue with missing data will still remain.

The non-parsed x non-parsed group remains excluded; these potential duplicates cannot be resolved.

The only way to address this is to work on the improvement of this data through reparsing, or manual data update. Once improved, run the matching again to verify if the updated records now are identifiable as duplicates of existing records.

Deduplication of Candidate Profiles using DataTrim Dupe Alerts

The process above has been described at a high level.

Take a deeper dive and see how the alerts are created in DataTrim Dupe Alerts using this Cheat Sheet.

Contact Us for more information

Don’t hesitate to reach out to our support team if you have any questions regarding this setup.

We will be happy to help.
