What is wrong with the data we do have?

Now that you have an idea of how to Assess your Data Quality, you obviously want to make the numbers look better and Improving Data Quality.

Let me start by warning you: The road is long, never ending and with a lot of dangers along the way.

I the following we will give you some guidance, tips and utilities which will keep you in good company on the route to success.

“Good company in a journey makes the way seem shorter.”

– Izaak Walton

Focus Focus Focus

Once a database is being established, deployed and used, it will over time become a storage for everything, like your addict or basement. To start improving data quality: get organized!

- Get rid of stuff which is too old and just sits there because you got the space.

- Check out the boxes with worn out stuff, there might be items you can fix and reuse, as for the rest: dump it!

- For the rest, sort it, box it up, so that you easily can find it next time you are missing just that one thing you know you got somewhere….

The Low haning fruit

We put “Delete Stuff” on top of this list, as this is the easiest way to improve your overall Data Quality, and it makes the rest of the work a little simpler.

Simply by removing really bad stuff (Too old, no opt-in, missing critical data) you can quickly increase your average score with a few free points.

If you are not keen on deleting stuff, then at least flag these records as “archived”, so that you easily can exclude them in your business process and even in your Data Quality Metrics and initiatives.

Next, we look at the rest, and classify it in order to set priorities and optimize the time and effort involved.

Keep it simple

Ideally Quality Data are: Complete, Accurate, Consistent, Unique and updated recently. But the cost of this perfect scenario is that you check, validate and verify the data regularly, which requires big amounts of resource for checking LinkedIn for job changes, phone calls to get emails and opt-in, update of Industry types, company size etc.

To avoid wasting your time and money it is important to be able to prioritize your data and only spend money on the records which really matters and which are worth the investment, and in order to do this, you need to figure out which records needs which treatment.

- Identify your Business-Critical records. Highly Visible Accounts, frequently used Contacts, Leads with sweet-spot segmentation data etc.

- Identify which records has sufficient address information for assignment to sales team etc.

- Identify which records are emailable (valid emails, not bounced, opt-in etc.)

- Identify which records are contactable by Phone

- Identify which leads and contacts have Job Titles which are requested by your business teams.

- Identify duplicates.

Having a mean to which you can easily identify these elements, you can start making your priorities and put various cleaning processed in place.

Make your priorities

When using the guidelines above you will end up with realizing that you are facing 3 challenges:

- Data you have but which is not useful because it is mis-spelled, inaccurate or inconsistent.

- Data which is missing altogether.

- Data which is redundant (Duplicates)

A strategy for improving your Data Quality should therefor typically include the following elements:

Consistency:

Standardization and Normalization of existing data

Identify Fields, for which you want to be able to build workflow, run reports, use as selecting criteria for campaigns, or pass to other apps, like Dialing Apps etc. Typically, this is State, Country, Phone numbers, Job Title etc.

For these fields as well as any other Picklist fields (Company size, Number of Employees etc.) you should choose a standard or a set of values which you consider as your reference, and then start converting everything which isn’t conform with the standard or reference into the corresponding value.

Completeness:

Population of missing data either by deriving values from other fields, or by pure enrichment (lookup on the internet, phoning, list purchases etc.)

Empty Fields, isn’t always a question about the user putting the data in being lazy, it could very well be that at the time the data was entered that particular data element was not available. If you get a list from a data vendor, the email may not be provided, but if the lead is valuable enough then you might want to call and get an opt-in for the email later. But before you make that call, you must of cause know if it is worthwhile.

In many cases, the missing data may be derived or extracted from other fields. It is not uncommon to e.g. find the Country value in the State field, or an international phone number prefix which can be used to identify a missing country value, or why not identify a state from an area code?

By extracting or performing lookups to populate missing fields with date from other parts of a record you may actually be able to assign e.g. a Lead to the correct territory or add the Lead to a campaign targets against e.g. Directors in Procurement.

Validity

Validation of data against reference data or standards

Validation of data is in comparison to Accuracy (which it the compliance to the Real-world) the check to see of a value or combination of values corresponds to a set of rules and standards.

Validation can simply be the quest of ensuring that values entered corresponds to the defined Picklist values defined for a field, to the more complex validation of an international phone number or address element.

Validation offers the value of identifying really bad records, records which will fail to comply to your business processes, and cause your team to waste time e.g. trying to call a number that doesn’t exists. Validation is your key element to find those records which have the biggest negative impact on your business processes, the trust in your data and thus user adoption.

Duplicates:

Deduplication of existing and new data is an important part of improving data quality.

This article will not address the issue of duplication or dupe prevention.

DataTrim provides 2 Apps, DataTrim Dupe Alerts and DataTrim Entry Check which addresses this element of improving Data Quality, and we refer to our website for more information about these. See also below.

Make Salesforce work for you!

Salesforce does have basic functionality which may help you improve your data quality .

By making fields which are necessary to support your business objectives required you force the users to populate this data when creating new records.

Validation Rules

Want to make sure that phone numbers follow a particular format? Set up validation rules for any field. Then, when records are saved, the data is checked to make sure that it follows the format. Validation rules are super versatile. For example, to make a standard field required, you can use a validation rule that checks to see if the field is blank. You set up validation rules for phone, credit card, and customer ID fields. You also set up validation rules to make standard lead contact info fields required.

Workflow Rules

Workflow rules are the magic wand in your Salesforce implementation. Workflow rules let you automate standard internal procedures and processes to save time across your company. You set up workflow rules so that leads are routed to the nearest rep. You do the same to assign service requests, too.

Critics: Although the use of these basic mechanism only can be recommended; they also should come with a warning. Making too many fields required and or too strict validation rules will block a lot of useful changes to your data. If a user is prevented to enter a new contact person, just because he doesn’t have the persons email, it will cause frustration in those cases where the user considers this person to be an important decisionmaker e.g. as he/she was at the end of the table during the last sales meeting.

Also, when you get external data, from e.g. a show, a list purchase, an acquisition etc. data doesn’t always follow your data model, and so data may be incomplete, but by rejecting these records through validation rules and required fields, you may miss out on some potential valuable new company and contact records.

So sometimes it is better to let the data enter your system, let you categorize it by identifying the Data Quality issue(s) and then improve the data on the fly.

Trialhead: https://trailhead.salesforce.com/en/content/learn/modules/data_quality/data_quality_improve_quality

Help: Require Field Input to Ensure Data Quality

Help: Validation Rules

Improving Data Quality with DataTrim -Your Data Laundry

The DataTrim Data Laundry App, is a Data Quality and Data Cleaning App for salesforce.

The App, can clean individual records as well as segments (like a list of newly imported records) in batch and in scheduled daily or weekly processes.

The App, contains reference data for data analysis, Standardization and Normalization, with multi-lingual, world-wide data recognition functionality for more than 50 countries including all European countries, USA, Canada and Australia.

Consistency through Standardization, Normalization

To create consistency, simply take a look at your list of Countries and States, I’m sure you are going to find numerous variations, dummy values and misspellings. These as well as other trivial or less trivial corrections can be addressed using referencing and data recognition algorithms.

The DataTrim Data Laundry comes with an extensive set of reference data packed into the App, which will take care of the majority of these cleanings across your critical business data fields, and allows you to amend this reference data in case your data contain variations which currently are not addressed by our algorithms.

Validation

Validation of data against standards or reference data in the DataTrim Data Laundry, will in addition to the actual correction by the Standardization and Normalization mentioned above, help highlight records where there is something more which is wrong. A Postcode could be Canadian, but the Country is set to US – An example where the data is complete and consistent, but seen in combination the data is invalid and thus useless.

Validation is a powerful mean to find out which records are usable for various business processes (territory assignment, telemarketing, emailing etc.),

Records which fails to be validated, are often (if they represent) Business Critial records, the records where you must spend more resources in verification and enrichment, via the web, external data sources, phone call etc.

Records or Fields which fail to be validated is also often a common indicator for “dummy” records, people trying to be anonymous on your webforms.

Validation will thus help identify bad records to purge or as an indicator for you not to waste resources on it.

The DataTrim Data Laundry performs validation across your critical business data fields like: City, Postal code, State, Country, Name, Email, phone and Title.

Job Title Standardization

Job Title is one of the most complex data elements which we all would like to collect in order to understand if we are talking to a decision maker, an influencer or a gate keeper. But the Job Title is also like a signature, not many are the same. Well of cause ? there is the CEO and the CFO, but how would you make sure you have the “CEOs” of your companies in Germany or France, where the titles are not English.

When segmenting and targeting your campaigns according to Title, you often end up with a nightmare trying to go through selecting the long list of Titles representing your target group, or risk leaving our a large number of leads simply because you didn’t know that ” geschäftsführer” actually is the German word for “CEO”.

To effectively use the Job Title values to anything, they need to be coded into something more structured like picklist values which you can use in your reports etc.

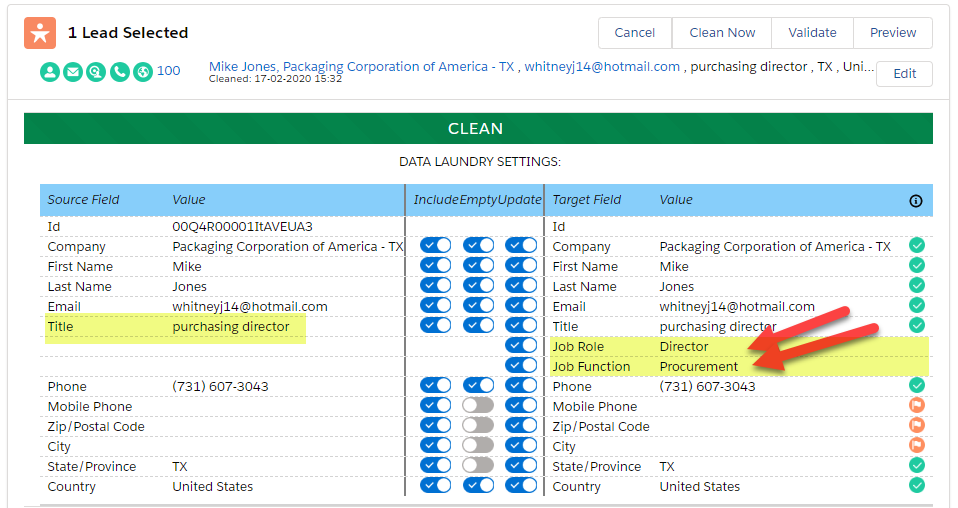

The DataTrim Data Laundry contains 2 fields, Job Role and Job Function plus an algorithm which can analyze a Job Title, and map the corresponding Job Role and Job Function.

Job Role indicates what Role the person has, i.e. Director, Manager, Assistant etc.

Job Function indicates in which part of the organization the person works, i.e. Finance, Sales, Procurement etc.

By combining thee 2 values you can segment your database into very specific target segments and get your targeted messages out to the right people.

The DataTrim Data Laundry is an App for Improving Data Quality, and it comes with a set of reference data built-in. Capable of detecting and mapping job titles in different languages. These reference data sets can be modified by you in case you are working in specific industries where our reference data still have shortcomings ?

Deduplication

DataTrim provides a separate App: DataTrim Dupe Alerts which addresses the deduplication of data in salesforce. See more here:

Dupe Prevention

DataTrim provides a separate App: DataTrim Entry Check which support the record creation process in salesforce by enforcing a fuzzy search, so that duplicate record creation can be prevented. See more here:

Cleaning using external sources or tools

As mentioned above, not everything can be cleaned by automation, but when you have a tool which can identify which records you need to review and treat then you have already come a long way. There is nothing as depressing to look at 1000’s of data to clean with no indicator of what is wrong with which data.

You can create list views in salesforce and use the inline table editor to quickly apply changes to the records, but if you really have 1000’s of records this may be a long lonesome road to take.

This is why the DataTrim Data Laundry lets you clean the data in Preview Mode. The Preview Mode will allow you to get an extract of your data, with a long list of Error Codes which for each record will tell you what the issue with this record is and thus what needs to be done to clean it.

The extract is available in csv format for you to load into MS-Excel or Google Sheets etc. where you can perform the updates in a structured form and then simply update the records in salesforce using the standard tools like Data Loader or similar.

Conclusion

Although the road to Improving Data Quality may seems long and complex involving multiple strategies and initiatives, a few simple steps may get you pretty far down the road if you make the right priorities at the beginning and focus your resources where it really matters.

“Sometimes the road less travelled is less travelled for a reason.”

– Jerry Seinfeld

At DataTrim…

We focus on providing cost effective solutions which will help you put your resources where they are needed and let automation take care of the more common stuff.